Signale WhatsApp AI

A WhatsApp assistant that listens to your voice notes, reads your photos, and organizes your reminders and notes, all inside your favorite chat app.

Overview

Signale is a personal assistant that lives inside WhatsApp. You can send it voice notes, photos, or text messages, and it will organize your notes, set reminders, and even add events to your calendar. Think of it as a "second brain" you can talk to without leaving your favorite chat app.

This project was a high-velocity engagement for a venture firm, designed to validate a consumer AI idea. In just 3 months, we went from zero code to a production system used by thousands of beta testers.

The Experience

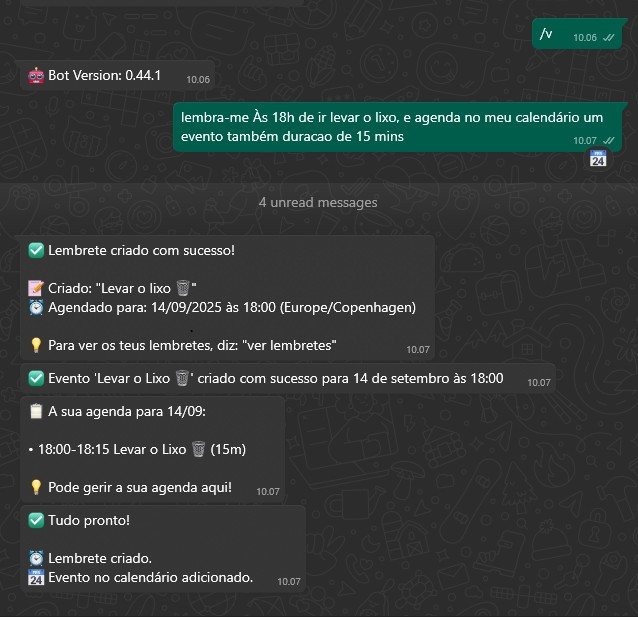

"Remind me at 6pm to take out the trash, and schedule a 15 min event on my calendar."

Bot Response (Translated 🇺🇸): "Reminder created successfully! Created: 'Take out trash'. Event 'Take out trash' created successfully for Sept 14th at 18:00."

The Challenge

The objective was aggressive: build a robust, scalable, multi-modal AI platform capable of handling real-time WhatsApp traffic, and launch it to a waitlist of thousands, all within a 3-month engagement.

Key technical constraints:

- Multi-Modal: Must handle text, voice notes (Audio-to-Text), and images (Vision AI) seamlessly.

- Reliability: WhatsApp users expect instant responses; downtime is not an option.

- Scale: The backend needed to auto-scale from 0 to N based on webhook traffic spikes.
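"0 to N" can be made concrete. Queue-driven autoscalers such as KEDA size a worker pool roughly by dividing the pending message count by a per-replica target, then clamping the result to configured bounds. The function below is a simplified sketch of that arithmetic, not KEDA's implementation. It ignores activation thresholds, polling intervals, and cooldown periods. The target of 5 messages per replica and the 0 to 10 replica bounds are hypothetical values.

```python
import math


def desired_replicas(queue_length: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Approximate replica count for a queue-length scale rule.

    ceil(queue_length / target_per_replica), clamped to [min_replicas, max_replicas].
    An empty queue scales down to min_replicas (0 means scale to zero).
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if queue_length <= 0:
        return min_replicas
    wanted = math.ceil(queue_length / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Hypothetical target: 5 pending messages per worker replica.
    for depth in (0, 3, 12, 400):
        print(depth, "->", desired_replicas(depth, target_per_replica=5))
```

An idle queue costs nothing because the pool sits at zero replicas. A marketing spike is absorbed up to the `max_replicas` ceiling, which also acts as a cost guardrail.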

The Solution: Event-Driven Microservices

We architected a solution natively on Azure, leveraging containerization and event-driven patterns to decouple heavy AI processing from the real-time chat loop.

1. Architecture & Tech Stack

- Core API: FastAPI with LangGraph for agent orchestration.

- Async Processing: Azure Service Bus decouples message ingestion from processing. When a user sends a voice note, the API acknowledges it instantly while a background worker processes the audio.

- Compute: Azure Container Apps (Serverless Containers) allowed us to scale workers strictly based on queue depth (KEDA scaling), optimizing cost and performance.
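Below is a minimal sketch of the acknowledge-then-process pattern. It uses an in-memory `asyncio.Queue` as a stand-in for Azure Service Bus and a short sleep as a placeholder for the slow AI call. In the real system, the webhook handler and the worker would run in separate containers and communicate through Service Bus. All function names here are hypothetical.

```python
import asyncio
import contextlib
import json


async def handle_webhook(queue: asyncio.Queue, body: bytes) -> int:
    """Enqueue the raw event and acknowledge right away with HTTP 200."""
    await queue.put(json.loads(body))
    return 200  # the slow work happens later, off the request path


async def worker(queue: asyncio.Queue, processed: list) -> None:
    """Background consumer that does the heavy lifting (e.g. transcription)."""
    while True:
        event = await queue.get()
        try:
            await asyncio.sleep(0.01)  # placeholder for a Whisper / Gemini call
            processed.append(event["id"])
        finally:
            queue.task_done()


async def main() -> tuple[list[int], list[str]]:
    queue: asyncio.Queue = asyncio.Queue()
    processed: list[str] = []
    consumer = asyncio.create_task(worker(queue, processed))
    statuses = [await handle_webhook(queue, json.dumps({"id": f"msg-{i}"}).encode())
                for i in range(3)]
    await queue.join()  # wait until the worker has drained every event
    consumer.cancel()
    with contextlib.suppress(asyncio.CancelledError):
        await consumer
    return statuses, processed


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Every webhook call returns 200 before any AI work starts. The worker catches up independently.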

2. Multi-Modal AI Pipeline

- Audio: Dedicated microservice consuming from Service Bus, using OpenAI Whisper for high-accuracy transcription of Portuguese voice notes.

- Vision: Dedicated microservice utilizing Gemini Flash for high-speed image analysis.

- Text: LangChain/LangGraph agents handling intent classification (Reminders vs. Notes vs. Chat).
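The routing step in front of these services can be sketched as a lookup on the `type` field that WhatsApp Cloud API webhook messages carry (`text`, `audio`, `image`, and so on). The queue names are assumptions for illustration, as is the choice to skip unsupported types silently.

```python
# Hypothetical queue names, one per dedicated microservice.
ROUTES = {
    "audio": "audio-transcription",  # Whisper worker
    "image": "image-analysis",       # Gemini Flash worker
    "text": "agent-text",            # LangGraph agent
}


def route_messages(payload: dict) -> list[tuple[str, str]]:
    """Return (queue_name, message_id) pairs for every message in a webhook payload."""
    routed = []
    for entry in payload.get("entry", []):
        for change in entry.get("changes", []):
            # Status-update webhooks have no "messages" key, so default to [].
            for msg in change.get("value", {}).get("messages", []):
                queue = ROUTES.get(msg.get("type"))
                if queue is not None:  # unsupported types (e.g. stickers) are skipped
                    routed.append((queue, msg["id"]))
    return routed


if __name__ == "__main__":
    sample = {"entry": [{"changes": [{"value": {"messages": [
        {"id": "wamid.1", "type": "audio", "audio": {"id": "media-1"}},
        {"id": "wamid.2", "type": "text", "text": {"body": "hi"}},
        {"id": "wamid.3", "type": "sticker"},
    ]}}]}]}
    print(route_messages(sample))
```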

Visual Intelligence

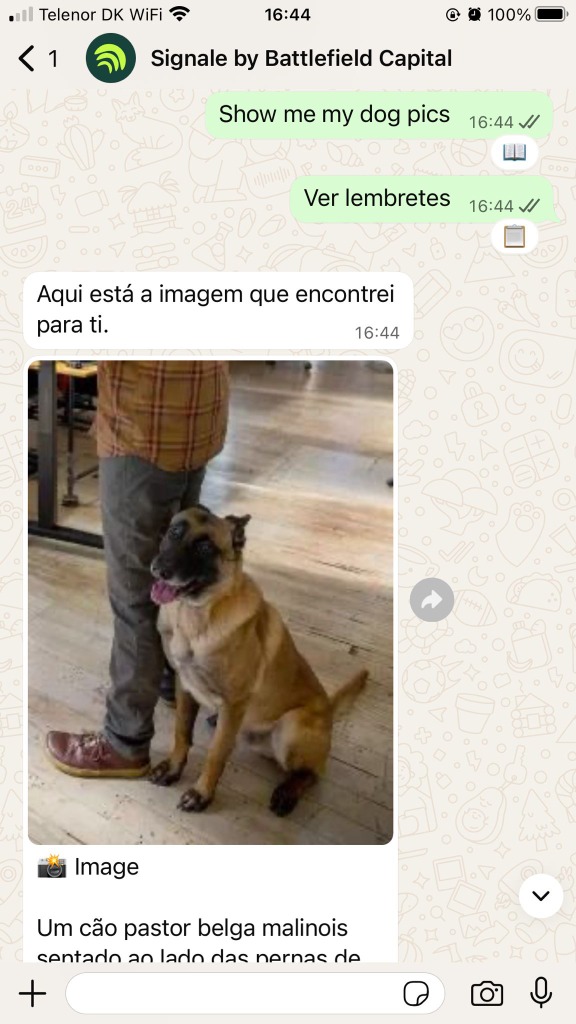

We integrated Gemini Flash to allow users to "search" their life visually.

"Show me my dog pics" - Signale performs semantic search over the user's uploaded media database to retrieve contextually relevant images.

Voice Intelligence

Users can simply talk to Signale. We process voice notes in real time to extract structured data.

User Audio (7s): "Add banana, apples, and beer to my supermarket shopping list."

Bot Response (Translated 🇺🇸): "Note saved: Supermarket Shopping List. • banana • apples • beer"
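Once the transcript has been turned into structured data, building the reply is deterministic. Below is a minimal sketch that assumes a hypothetical `Note` schema filled in by the agent. The Whisper and LLM steps are not shown, and the one-bullet-per-line layout is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Note:
    """Hypothetical schema the agent fills in from a voice-note transcript."""
    title: str
    items: list[str] = field(default_factory=list)


def render_note_reply(note: Note) -> str:
    """Format a saved note as a WhatsApp confirmation with one bullet per item."""
    lines = [f"Note saved: {note.title}."]
    lines += [f"• {item}" for item in note.items]
    return "\n".join(lines)


if __name__ == "__main__":
    note = Note("Supermarket Shopping List", ["banana", "apples", "beer"])
    print(render_note_reply(note))
```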

Conversational Reminders

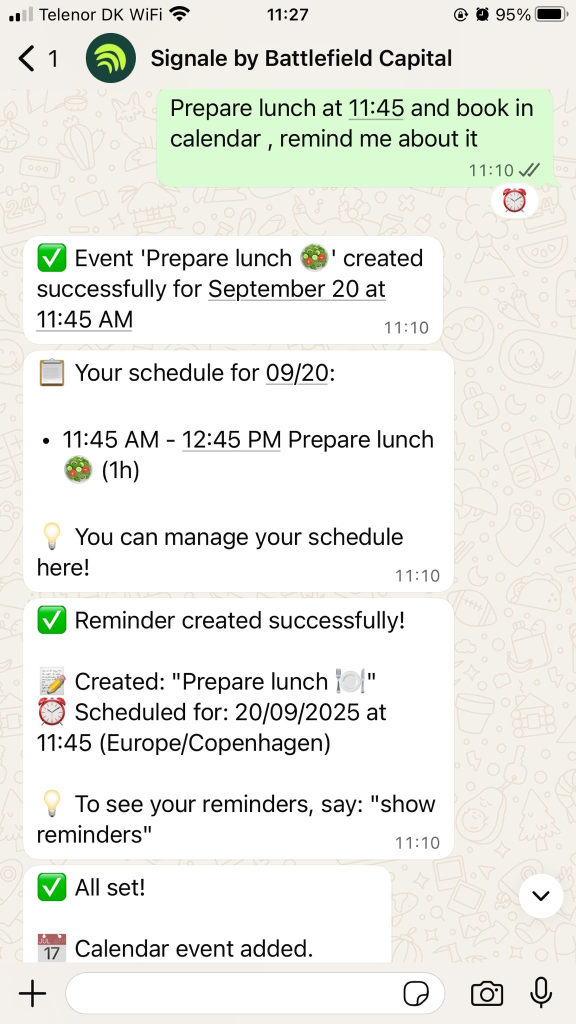

The agent understands natural language time concepts and handles complex, multi-intent instructions.

1. Multi-Intent Instruction

"Prepare lunch at 11:45 and book in calendar, remind me about it" - One prompt, two actions:

- Creates a Google Calendar event.

- Schedules a WhatsApp reminder.
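One way to model "one prompt, two actions" is to have the agent return a list of typed actions and execute each one in turn. The `CalendarEvent` and `Reminder` types are hypothetical, as are the 15-minute default duration, the confirmation strings, and the sample date. The Google Calendar and reminder-scheduling calls appear only as comments.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Union


@dataclass
class CalendarEvent:
    title: str
    start: datetime
    minutes: int = 15  # hypothetical default duration


@dataclass
class Reminder:
    text: str
    at: datetime


Action = Union[CalendarEvent, Reminder]


def execute_plan(actions: list[Action]) -> list[str]:
    """Run each extracted action and collect one confirmation line per action."""
    confirmations = []
    for action in actions:
        if isinstance(action, CalendarEvent):
            # A real handler would call the Google Calendar API here.
            confirmations.append(
                f"Event '{action.title}' at {action.start:%H:%M} ({action.minutes} min)")
        elif isinstance(action, Reminder):
            # A real handler would persist the reminder and schedule the WhatsApp notification.
            confirmations.append(f"Reminder '{action.text}' at {action.at:%H:%M}")
        else:
            raise TypeError(f"Unsupported action: {action!r}")
    return confirmations


if __name__ == "__main__":
    lunch = datetime(2024, 9, 14, 11, 45)  # sample date for illustration
    plan = [CalendarEvent("Prepare lunch", lunch), Reminder("Prepare lunch", lunch)]
    print(execute_plan(plan))
```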

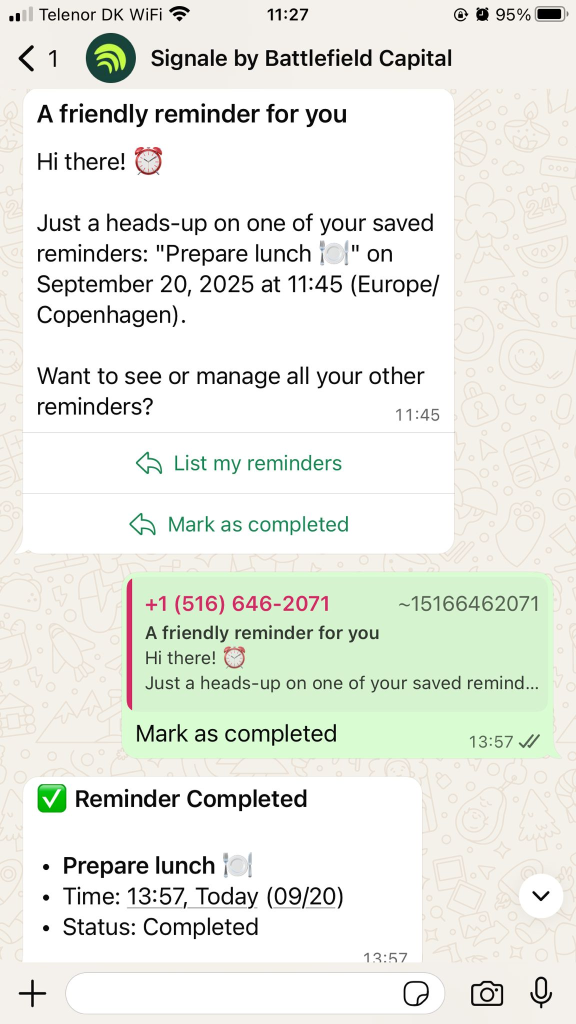

2. Interactive Completion

When the time comes, Signale sends a notification with interactive buttons. Users can "Mark as completed" directly in the chat, closing the loop.
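The notification can be expressed as a WhatsApp Cloud API interactive message of type `button`. When the user taps the button, the webhook receives a message of type `interactive` containing a `button_reply`. The sketch below builds the outgoing payload and reads the tap back. The `complete:<id>` button ID encoding is a hypothetical convention, and the HTTP call that sends the payload is omitted.

```python
from typing import Optional

PREFIX = "complete:"  # hypothetical button-ID convention


def completion_prompt(to: str, reminder_id: str, text: str) -> dict:
    """Build an interactive reply-button message for a due reminder."""
    return {
        "messaging_product": "whatsapp",
        "recipient_type": "individual",
        "to": to,
        "type": "interactive",
        "interactive": {
            "type": "button",
            "body": {"text": f"Reminder: {text}"},
            "action": {
                "buttons": [
                    {"type": "reply",
                     "reply": {"id": f"{PREFIX}{reminder_id}", "title": "Mark as completed"}},
                ]
            },
        },
    }


def completed_reminder_id(message: dict) -> Optional[str]:
    """Return the reminder ID from an incoming button-reply message, or None."""
    if message.get("type") != "interactive":
        return None
    button_id = message.get("interactive", {}).get("button_reply", {}).get("id", "")
    return button_id[len(PREFIX):] if button_id.startswith(PREFIX) else None
```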

3. Advanced LLMOps

We moved beyond simple prompt engineering to build a deterministic cognitive architecture.

Concept-Driven Intent Analysis

Instead of fragile keyword matching, we built a Semantic Decision Engine that analyzes user intent based on "Mental Models". The agent determines if the user is interacting with:

- The Database (Note-taking)

- The Calendar (Scheduling)

- The Clock (Reminders)

- The Conversation (Chat)

This "Object-Oriented" prompt design allowed us to handle complex, multi-intent queries that stumped traditional classifiers.
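The classifier's output can be constrained to exactly these four targets. The sketch assumes the LLM returns a list of string labels. The enum values are hypothetical design choices, as is the fallback to the conversation target for unknown labels. The example only illustrates how a multi-intent answer can be validated before routing.

```python
from enum import Enum


class Target(Enum):
    DATABASE = "database"          # note-taking
    CALENDAR = "calendar"          # scheduling
    CLOCK = "clock"                # reminders
    CONVERSATION = "conversation"  # chat


def parse_targets(labels: list[str]) -> list[Target]:
    """Validate raw classifier labels, keeping order and dropping duplicates.

    Unknown labels fall back to CONVERSATION so the user always gets a reply.
    """
    targets: list[Target] = []
    for label in labels:
        try:
            target = Target(label.strip().lower())
        except ValueError:
            target = Target.CONVERSATION
        if target not in targets:
            targets.append(target)
    return targets or [Target.CONVERSATION]


if __name__ == "__main__":
    # A multi-intent request such as "book lunch and remind me" yields two targets.
    print(parse_targets(["calendar", "Clock", "calendar"]))
```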

Type-Safe Generation

We used Pydantic AI (with the StructuredLlmAgent pattern) to guarantee that every LLM output adheres to a strict schema. This prevents the "hallucinated JSON" errors common in untyped LangChain implementations.
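Pydantic AI performs this validation with Pydantic models. To stay dependency-free, the sketch below shows the same validate-or-reject idea with a standard-library dataclass. The `ReminderOutput` schema and `SchemaError` are hypothetical. Rejecting unexpected keys is stricter than Pydantic's default, which ignores extra fields.

```python
import json
from dataclasses import dataclass, fields
from datetime import datetime


@dataclass(frozen=True)
class ReminderOutput:
    """Hypothetical schema for a reminder extracted by the LLM."""
    text: str
    remind_at: datetime


class SchemaError(ValueError):
    """Raised when raw LLM output does not match the schema."""


def parse_reminder(raw: str) -> ReminderOutput:
    """Validate raw LLM output against ReminderOutput or raise SchemaError."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise SchemaError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise SchemaError("expected a JSON object")
    expected = {f.name for f in fields(ReminderOutput)}
    if set(data) != expected:  # missing or invented keys are both rejected
        raise SchemaError(f"expected keys {sorted(expected)}, got {sorted(data)}")
    if not isinstance(data["text"], str) or not data["text"].strip():
        raise SchemaError("text must be a non-empty string")
    try:
        remind_at = datetime.fromisoformat(data["remind_at"])
    except (TypeError, ValueError) as exc:
        raise SchemaError("remind_at must be an ISO-format datetime string") from exc
    return ReminderOutput(text=data["text"], remind_at=remind_at)
```

A rejected output can then be retried or answered with a clarifying question rather than being written to the database.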

4. Application Quality: Specification-Driven Testing

We treated each expected conversational state as an integration test.

- 10-Point UX Specification: A rigorous test suite defining behavior for "Ambiguous Inputs", "Mixed Language", and "Implicit Context".

- Golden Path Verification: Every release was vetted against a suite of 250+ integration tests covering real-world scenarios (e.g., "The Shopping List Test", "The Cancelled Meeting Test") to ensure no regression in personality or logic.
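A specification-driven suite can be kept table-driven: each scenario pairs a user message with the set of actions it must trigger. "The Shopping List Test" comes from the list above. The second scenario name, the action labels, and the stub agent are hypothetical. The stub uses keyword matching only to keep the example self-contained; it is not how Signale classifies intent.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scenario:
    name: str
    message: str
    expected_actions: set[str]


def run_spec(agent: Callable[[str], set[str]], scenarios: list[Scenario]) -> list[str]:
    """Run every scenario and return readable failures (an empty list means all passed)."""
    failures = []
    for s in scenarios:
        actual = agent(s.message)
        if actual != s.expected_actions:
            failures.append(f"{s.name}: expected {sorted(s.expected_actions)}, got {sorted(actual)}")
    return failures


def stub_agent(message: str) -> set[str]:
    """Stand-in for the real agent; a real suite would call the deployed graph."""
    text = message.lower()
    actions = set()
    if "list" in text:
        actions.add("note")
    if "remind" in text:
        actions.add("reminder")
    if "calendar" in text:
        actions.add("calendar_event")
    return actions


SCENARIOS = [
    Scenario("The Shopping List Test",
             "Add banana, apples, and beer to my supermarket shopping list.", {"note"}),
    Scenario("Multi-Intent Lunch Test",
             "Prepare lunch at 11:45 and book in calendar, remind me about it",
             {"calendar_event", "reminder"}),
]

if __name__ == "__main__":
    print(run_spec(stub_agent, SCENARIOS) or "all scenarios passed")
```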

5. Resilient System Design

The system handles thousands of concurrent webhook events through a resilient worker pattern (e.g., ImageProcessor):

- Dead Letter Queues: Failed messages are isolated for analysis, never blocking the pipe.

- Exponential Backoff: Transient errors (e.g., Azure API rate limits) are retried with increasing delays instead of failing the message.

- Graceful Fallbacks: On a Blob Storage cache miss, the worker seamlessly falls back to fetching fresh media from the WhatsApp API.
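Below is a minimal sketch of these worker-side pieces. It rests on three stand-ins: a hypothetical `TransientError` for retryable failures such as rate limits, a plain list for the Service Bus dead-letter subqueue, and a dict for the Blob Storage cache. Delays double on each attempt and get random jitter added. The attempt count, the base delay, and re-caching fetched media are illustrative choices.

```python
import random
import time
from typing import Any, Callable, Optional


class TransientError(Exception):
    """Retryable failure, e.g. an HTTP 429 rate-limit response."""


def process_with_retries(message: Any, handler: Callable[[Any], Any], dead_letters: list,
                         max_attempts: int = 4, base_delay: float = 0.5,
                         sleep: Callable[[float], None] = time.sleep) -> Optional[Any]:
    """Retry transient failures with exponential backoff; dead-letter anything else."""
    for attempt in range(1, max_attempts + 1):
        try:
            return handler(message)
        except TransientError:
            if attempt == max_attempts:
                break
            delay = base_delay * 2 ** (attempt - 1)      # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter spreads out retries
        except Exception:
            break  # non-transient: retrying will not help
    dead_letters.append(message)  # isolate the message so the queue keeps flowing
    return None


def load_media(media_id: str, cache: dict, fetch_from_whatsapp: Callable[[str], bytes]) -> bytes:
    """Prefer the cached copy; on a miss, fetch fresh media and cache it."""
    data = cache.get(media_id)
    if data is None:
        data = fetch_from_whatsapp(media_id)
        cache[media_id] = data
    return data
```

Injecting `sleep` keeps the retry logic testable without real waiting.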

6. Production Engineering

We didn't just build an MVP; we built a production system.

- CI/CD: Full GitHub Actions pipelines building multi-stage Docker images and deploying them as new Azure Container Apps revisions.

- Infrastructure as Code: All resources defined declaratively (Terraform/Bicep), so environments are reproducible.

- Monetization: Seamless Stripe integration for subscription management within the Next.js web dashboard.

DevOps Highlight: Zero-Downtime Deployments

We implemented a Blue/Green Deployment strategy using Azure Container Apps revisions. New versions of the AI agent are spun up and health-checked before receiving traffic, ensuring that users never experience a broken chat session during updates.
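The promotion rule behind this can be sketched as a gate. All traffic stays on the current (blue) revision until the new (green) revision passes a health check, and only then does it shift over. The `check` callable, the retry count, and the blue/green weight labels are hypothetical. In Azure Container Apps, the actual shift would be applied by updating revision traffic weights, which this sketch does not do.

```python
import time
from typing import Callable


def promote_if_healthy(check: Callable[[], bool], attempts: int = 5,
                       interval: float = 2.0,
                       sleep: Callable[[float], None] = time.sleep) -> dict:
    """Return traffic weights: 100% to green only once its health check passes.

    Until `check` succeeds, blue keeps all traffic, so an unhealthy release
    never reaches users.
    """
    for attempt in range(attempts):
        if check():
            return {"blue": 0, "green": 100}
        if attempt < attempts - 1:
            sleep(interval)  # give the new revision time to warm up
    return {"blue": 100, "green": 0}  # keep serving the old revision
```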

Key Outcomes

- Speed to Market: Delivered a fully functional, monetized platform in under 90 days (Concept to Cash).

- Elastic Scale: The architecture handled hundreds of concurrent users with no manual intervention or performance degradation.

- Cost Efficiency: The serverless, event-driven design kept idle costs under $5/month while scaling out on demand during marketing pushes.

Signale demonstrates that with the right architectural choices (Microservices + PaaS), a small engineering team can deliver "Big Tech" scale and reliability in startup timelines.